Note: this is a transliteration of the source code into English. It is very out-of-date. Please read the code comments instead, starting here: https://github.com/xapi-project/xen-api/blob/25487aa89476026b9f9bdf5a196710a0d61e808b/ocaml/xapi/message_forwarding.ml#L1188


VM Startup

This page documents the current sequence of steps that take place when a VM is started.

Note that only the code path for a successful VM start is documented here. On failure, some paths attempt to reverse the steps already taken.

On the pool master


In message_forwarding.ml (http://xenbits.xen.org/xapi/xen-api.hg?file/25e9038fce00/ocaml/xapi/message_forwarding.ml), function VM.start:

- Contact: xen-devel@lists.xen.org Description: The max outstanding amount of data the it can have is 898kB (64K of data use 18 slot, out of 256. 256 / 18 = 14, 14. 64KB). This can be expanded by having multi-page to expand the ring.

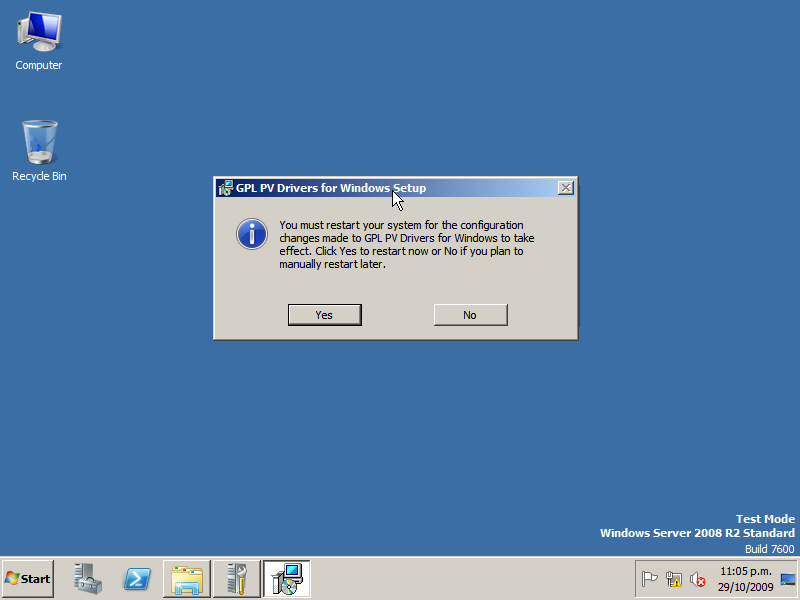

- To install a driver on your target system, unpack the tarball, then navigate to either the x86 or x64 subdirectory (whichever is appropriate), and execute the copy of dpinst.exe you find there with Administrator privilege. More information can be found here. You can also get the drivers via https://xenbits.xenproject.org/pvdrivers/win/9.0.0/.

Stability fixes to make AWS PV drivers more resilient. Added support for Windows Server 2016. Stability fixes for all supported Windows OS versions.AWS PV driver version 7.4.3's signature expires on March 29, 2019. We recommend updating to the latest AWS PV driver.

Xen Gpl Pv Driver Developers Network Connect

- Mark the VM as currently being started (with_vm_operation):

  - Check that the start operation is valid on this VM (Xapi_vm_lifecycle.assert_operation_valid).

  - Add the start operation to the VM's current-operations (Db.VM.add_to_current_operations).

  - Update the VM's allowed operations (Xapi_vm_lifecycle.update_allowed_operations).
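The marking step behaves like a bracket: the VM is marked before the rest of the start runs and unmarked afterwards, whether or not that work succeeds. The following is a minimal OCaml sketch, assuming a toy in-memory VM record. The record fields, the validity rule and the allowed-operations rule are hypothetical, simplified stand-ins for the database and Xapi_vm_lifecycle. They are not xapi's actual code.

```ocaml
(* Toy in-memory VM record: only the fields these steps touch. *)
type vm = {
  name : string;
  mutable current_operations : (string * string) list; (* task id -> operation *)
  mutable allowed_operations : string list;
}

(* Hypothetical validity rule: [op] must be allowed and nothing else may be in flight. *)
let assert_operation_valid vm op =
  if (not (List.mem op vm.allowed_operations)) || vm.current_operations <> [] then
    failwith (Printf.sprintf "operation %s is not valid on %s" op vm.name)

(* Hypothetical recomputation for a halted VM: nothing is allowed while an
   operation is in flight, otherwise start and destroy are allowed. *)
let update_allowed_operations vm =
  vm.allowed_operations <-
    (if vm.current_operations = [] then [ "start"; "destroy" ] else [])

(* Mark the VM, run [f], and always unmark it afterwards, even if [f] raises. *)
let with_vm_operation ~task_id ~op vm f =
  assert_operation_valid vm op;
  vm.current_operations <- (task_id, op) :: vm.current_operations;
  update_allowed_operations vm;
  Fun.protect
    ~finally:(fun () ->
      vm.current_operations <- List.remove_assoc task_id vm.current_operations;
      update_allowed_operations vm)
    f
```

Because the operation is recorded in current-operations before the body runs, a second concurrent start on the same VM fails the validity check instead of racing with the first.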

- Mark the VM's VBDs as currently being attached (with_vbds_marked). On each VBD:

  - Check that the attach operation is valid on this VBD (Xapi_vbd_helpers.assert_operation_valid).

  - Add the attach operation to the VBD's current-operations (Db.VBD.add_to_current_operations).

  - Update the VBD's allowed operations (Xapi_vbd_helpers.update_allowed_operations).

- Mark the VM's VIFs as currently being attached (with_vifs_marked). On each VIF:

  - Check that the attach operation is valid on this VIF (Xapi_vif_helpers.assert_operation_valid).

  - Add the attach operation to the VIF's current-operations (Db.VIF.add_to_current_operations).

  - Update the VIF's allowed operations (Xapi_vif_helpers.update_allowed_operations).
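The per-device marking of VBDs and VIFs can be sketched the same way. This sketch uses a hypothetical `device` record, with an `attachable` flag standing in for the allowed-operations list. It also assumes that if marking a later device fails, the devices already marked are unmarked first. That assumption follows the note that failure paths try to reverse previous steps, and it is not a description of xapi's exact behaviour.

```ocaml
(* Toy VBD or VIF record: only the fields the marking steps touch. *)
type device = {
  id : string;
  mutable current_operations : (string * string) list; (* task id -> operation *)
  mutable attachable : bool; (* hypothetical stand-in for allowed_operations *)
}

(* Check, add the attach operation, then recompute what is allowed. *)
let mark ~task_id d =
  if not d.attachable then failwith ("attach is not valid on " ^ d.id);
  d.current_operations <- (task_id, "attach") :: d.current_operations;
  d.attachable <- false

(* Remove the attach operation, then recompute what is allowed. *)
let unmark ~task_id d =
  d.current_operations <- List.remove_assoc task_id d.current_operations;
  d.attachable <- (d.current_operations = [])

(* Mark every device, run [f], then unmark them all. If marking fails part-way,
   the devices already marked are unmarked before the error is re-raised. *)
let with_devices_marked ~task_id devices f =
  let marked = ref [] in
  let unmark_all () = List.iter (unmark ~task_id) !marked in
  (try List.iter (fun d -> mark ~task_id d; marked := d :: !marked) devices
   with e -> unmark_all (); raise e);
  Fun.protect ~finally:unmark_all f
```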

- Compute the 'memory overhead' of the VM, so that we know how much memory it needs on the target host.

- Find a suitable host:

  - Either consult the 'Workload balancing' service (interface in workload_balancing.ml),

  - Or use the default logic in Xapi_vm_helpers.choose_host_for_vm:

    - Iterate through hosts, starting with the host the VM has affinity to (if any), then the other hosts in a random order (drawn from a distribution biased towards hosts with more memory), until one is found on which the VM can boot (Xapi_vm_helpers.choose_host_for_vm_no_wlb):

      - Check that the VM has an appropriate number of VCPUs (validate_vcpus).

      - Check that the VM's memory constraints are valid (validate_memory).

      - Check that the VM's shadow multiplier is valid (validate_shadow_multiplier).

      - Check that the VM's 'after-crash' actions are valid (validate_actions_after_crash).

      - Check that the host is live (assert_host_is_live).

      - Check that the host is enabled (assert_host_is_enabled).

      - Check that the host can see the SRs for the VM's VDIs, and for its suspend-VDI if the VM is suspended (assert_can_see_SRs).

      - Check that the host has a PIF on each network used by the VM's VIFs.

      - If the VM is to boot in HVM mode, check that the host is HVM-capable.

      - Check that the host has enough memory for the VM.

      - Check that using this host preserves the HA plan (Xapi_ha_vm_failover.assert_vm_placement_preserves_ha_plan).
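Here is a sketch of the default host-selection loop. It assumes that free memory is the weight used to bias the random order, because the exact weighting is not described here. `can_boot_on` is a hypothetical callback that stands in for all of the checks listed above. Every host gets a weight of at least 1, so hosts with no free memory are still tried, just last on average.

```ocaml
(* Toy host description: just enough to drive the selection loop. *)
type host = { name : string; free_memory : int64 }

(* Weight used for the biased order; at least 1 so that every host is tried. *)
let weight h = max 1L h.free_memory

(* Weighted shuffle: repeatedly draw one of the remaining hosts with probability
   proportional to its weight, so hosts with more free memory tend to come first. *)
let biased_order hosts =
  let rec pick target acc = function
    | [] -> invalid_arg "biased_order: empty list"
    | h :: rest ->
      let acc = Int64.add acc (weight h) in
      if Int64.compare target acc < 0 then h else pick target acc rest
  in
  let rec go ordered remaining =
    match remaining with
    | [] -> List.rev ordered
    | _ ->
      let total = List.fold_left (fun s h -> Int64.add s (weight h)) 0L remaining in
      let chosen = pick (Random.int64 total) 0L remaining in
      go (chosen :: ordered) (List.filter (fun h -> h != chosen) remaining)
  in
  go [] hosts

(* Try the affinity host first (if any), then the others in biased random order,
   returning the first host that passes [can_boot_on]. *)
let choose_host ?affinity ~can_boot_on hosts =
  let preferred, others = List.partition (fun h -> Some h.name = affinity) hosts in
  List.find_opt can_boot_on (preferred @ biased_order others)
```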

- Allocate the VM to the chosen host (allocate_vm_to_host):

  - Set the VM's last-booted record (Helpers.set_boot_record).

  - Set the VM as 'scheduled_to_be_resident_on' the chosen host (Db.VM.set_scheduled_to_be_resident_on).

- Execute the vm-start operation on the host (see below).

- Once the operation is complete:

  - Remove the vm-start operation from the host's current-operations (Db.Host.remove_from_current_operations).

  - Update the host's allowed operations (Xapi_host_helpers.update_allowed_operations).

  - Unset the VM's 'scheduled_to_be_resident_on' field.

- Unmark the VM's VIFs (on leaving with_vifs_marked). On each VIF:

  - Remove the attach operation from the VIF's current-operations (Db.VIF.remove_from_current_operations).

  - Update the VIF's allowed operations (Xapi_vif_helpers.update_allowed_operations).

- Unmark the VM's VBDs (on leaving with_vbds_marked). On each VBD:

  - Remove the attach operation from the VBD's current-operations (Db.VBD.remove_from_current_operations).

  - Update the VBD's allowed operations (Xapi_vbd_helpers.update_allowed_operations).

- Mark the VM as no longer being started (on leaving with_vm_operation):

  - Remove the start operation from the VM's current-operations (Db.VM.remove_from_current_operations).

  - Update the VM's allowed operations (Xapi_vm_lifecycle.update_allowed_operations).

- Update the VM's VBDs' allowed operations (update_vbd_operations).

- Update the VM's VIFs' allowed operations (update_vif_operations).

- Create a message saying that the VM was started.

- Push the RRD to the host (Monitor_rrds.push_rrd).
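The VM, VBD and VIF markings nest inside one another. As a result they are released in reverse order: VIFs first, then VBDs, then the VM. That is why the steps above unwind in that order. The following minimal OCaml outline records each step in a trace. `choose_host` and `start_on` are hypothetical callbacks, and each database update is reduced to a single trace line. The host clean-up is collapsed into one "unset" line.

```ocaml
(* Every step appends a line here so that the order is visible. *)
let trace : string list ref = ref []
let step s = trace := s :: !trace

(* Generic bracket: record [acquire], run [f], record [release] even if [f] raises. *)
let bracket ~acquire ~release f =
  step acquire;
  Fun.protect ~finally:(fun () -> step release) f

(* Hypothetical outline of VM.start on the master: the three markings nest,
   so they are released in the reverse order. *)
let start_on_master ~choose_host ~start_on =
  bracket ~acquire:"mark VM" ~release:"unmark VM" (fun () ->
      bracket ~acquire:"mark VBDs" ~release:"unmark VBDs" (fun () ->
          bracket ~acquire:"mark VIFs" ~release:"unmark VIFs" (fun () ->
              let host = choose_host () in
              step ("allocate to " ^ host);
              Fun.protect
                ~finally:(fun () -> step "unset scheduled_to_be_resident_on")
                (fun () -> start_on host))));
  step "update VBD and VIF allowed operations";
  step "create 'VM started' message";
  step "push RRD"
```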

On the pool slave


Serialised with other host-local VM operations (in the vm_lifecycle_op queue), the following steps are performed:

- Lock the VM. This prevents the background event thread from noticing any domains or devices.

- Check that VM.power_state=Halted in the database.

- Get the last_booted_record of the VM from the database (Helpers.get_boot_record).

- If VM.ha_restart_priority is set, then set VM.ha_always_run to true in the database.

- If the VM has no BIOS strings set, then set 'generic whitebox' BIOS strings in the database.

- Start the VM in the paused state (Vmops.start_paused).

- Check VM parameters (Vmops.check_vm_parameter):

  - Check that the VM has more than 0 and fewer than 64 VCPUs.

  - Check that the device name of each of the VM's VBDs is unique.

  - Check that the VM has more than a global minimum amount of memory (currently 16MiB).
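The parameter checks can be sketched as a pure function. The thresholds come from the text: more than 0 and fewer than 64 VCPUs, and more than 16MiB of memory. The `vm_params` record is hypothetical. This sketch collects every failure rather than stopping at the first one. That is a design choice made here, not necessarily what Vmops does.

```ocaml
(* Global minimum from the text: 16MiB. *)
let min_memory_bytes = Int64.mul 16L (Int64.mul 1024L 1024L)

(* Hypothetical view of the VM parameters being checked. *)
type vm_params = {
  vcpus : int;
  vbd_devices : string list; (* device names, e.g. "xvda" *)
  memory_bytes : int64;
}

(* Return every problem found; an empty list means the parameters pass. *)
let check_vm_parameters p =
  (* On a sorted list, duplicates are adjacent. *)
  let rec has_duplicate = function
    | a :: (b :: _ as rest) -> a = b || has_duplicate rest
    | _ -> false
  in
  List.filter_map
    (fun (ok, msg) -> if ok then None else Some msg)
    [ (p.vcpus > 0 && p.vcpus < 64, "VCPU count must be between 1 and 63");
      (not (has_duplicate (List.sort compare p.vbd_devices)), "VBD device names must be unique");
      (Int64.compare p.memory_bytes min_memory_bytes > 0, "memory is not above the global minimum") ]
```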


- Get the list of VBDs which were marked by the message forwarding layer.

- Ask the host memory ballooning daemon to reserve memory (at least VM.memory_dynamic_min, up to VM.memory_dynamic_max).
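Here is a simplified model of the reservation. It assumes the daemon grants as much memory as it can, up to the dynamic maximum, and refuses if it cannot reach the dynamic minimum. A real ballooning daemon can also reclaim memory from other guests. This sketch considers only a single `free` figure, which is a hypothetical input.

```ocaml
(* Simplified reservation: grant up to [dynamic_max], refuse below [dynamic_min].
   [free] is a hypothetical single figure for the memory available on the host. *)
let reserve_memory ~(free : int64) ~dynamic_min ~dynamic_max =
  if Int64.compare dynamic_min dynamic_max > 0 then invalid_arg "dynamic_min > dynamic_max"
  else if Int64.compare free dynamic_min < 0 then None
  else Some (min free dynamic_max)
```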

- Verify that the host actually supports HVM, if this is an HVM domain.

- Create an empty Xen domain.

- Add per-domain xenstore entries, e.g. platform/ flags and BIOS strings. Note that no devices are connected yet.

- Set the domain ID in the database.

- Clear all VBDs' and VIFs' 'currently-attached', 'status-code' and 'status-details' fields (Vmops.clear_all_device_status_fields).

- Check to make sure a VDI record exists for each VBD (Vbdops.check_vdi_exists).

- Set the machine address size, if it's specified in the VM's 'other-config' field.

- Choose a 'kernel' (Vmops.create_kernel):

  - Either the domain is PV, in which case we either use the VM.PV_kernel path in dom0 or run pygrub to extract the kernel from the guest's filesystem;

  - Or the domain is HVM, in which case we use hvmloader.
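The kernel choice reduces to three cases. In this sketch, the `use_bootloader` flag is a hypothetical stand-in for however the VM record indicates that pygrub should be used.

```ocaml
(* Where the guest's kernel comes from, per the three cases above. *)
type kernel_source =
  | Dom0_path of string (* PV: the VM.PV_kernel path in dom0 *)
  | Pygrub              (* PV: extract the kernel from the guest's filesystem *)
  | Hvmloader           (* HVM: boot via hvmloader *)

(* Hypothetical decision rule: a PV guest uses pygrub when [use_bootloader] is set,
   otherwise the dom0 path given in [pv_kernel]. *)
let choose_kernel ~is_hvm ~use_bootloader ~pv_kernel =
  if is_hvm then Hvmloader
  else if use_bootloader then Pygrub
  else Dom0_path pv_kernel
```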

- Call the domain builder in libxenguest to prepare the domain's memory image:

  - Also set vCPU -> pCPU affinity.

  - Also set vCPU scheduling parameters: weight and cap.

  - Also set the flags for NX, viridian, PAE, ACPI, APIC, ACPI S3 and ACPI S4.

- For each VDI, call the storage backend's vdi_attach (and, if necessary, vdi_activate).

- Create xenstore entries for each configured vCPU (this enables PV guests to hotplug/unplug)

- Add VIFs (Vmops.create_vifs). For each VIF:

  - Create the bridge for the VIF's network (Xapi_network.attach_internal).

  - If the VIF is on the special 'Guest installer network', associate the DHCP lease with the VIF (Xapi_udhcpd.maybe_add_lease).

  - Write netfront/netback entries to xenstore.

  - Wait for the 'add' uevent to be processed by /etc/xensource/scripts/vif (called from udev).
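The following sketch builds the netfront/netback entries for one VIF. It assumes the conventional Xen xenstore layout: the frontend lives under /local/domain/<domid>/device/vif/<devid>, and the backend lives under /local/domain/<backend-domid>/backend/vif/<domid>/<devid>. Only a few keys are shown. The initial state value 1 corresponds to XenbusState Initialising. Check the exact key set against your Xen version.

```ocaml
(* XenbusState value written initially by both ends (Initialising = 1). *)
let xenbus_initialising = "1"

(* Frontend and backend xenstore entries for one VIF, as (path, value) pairs.
   The key set is illustrative, not exhaustive. *)
let vif_xenstore_entries ~domid ~devid ~backend_domid ~mac =
  let frontend = Printf.sprintf "/local/domain/%d/device/vif/%d" domid devid in
  let backend = Printf.sprintf "/local/domain/%d/backend/vif/%d/%d" backend_domid domid devid in
  [ (frontend ^ "/backend", backend);
    (frontend ^ "/backend-id", string_of_int backend_domid);
    (frontend ^ "/state", xenbus_initialising);
    (frontend ^ "/mac", mac);
    (backend ^ "/frontend", frontend);
    (backend ^ "/frontend-id", string_of_int domid);
    (backend ^ "/state", xenbus_initialising);
    (backend ^ "/mac", mac) ]
```

Each end records where the other lives. The udev-invoked vif script then acts on the backend device once it appears.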

- If the guest is PV, write pcifront/pciback entries to xenstore for any configured PCI passthrough devices.

- Mark the correct number of vCPUs as initially online (NB only PV guests support hotplug).
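The per-vCPU xenstore entries and the initial online count can be sketched together. The sketch writes one entry per configured vCPU and marks the first `online` of them as available. It assumes the conventional cpu/<n>/availability key with the values online and offline, which is an assumption to verify against your Xen version.

```ocaml
(* One (path, value) entry per configured vCPU; the first [online] are available. *)
let vcpu_entries ~domid ~max_vcpus ~online =
  List.init max_vcpus (fun i ->
      ( Printf.sprintf "/local/domain/%d/cpu/%d/availability" domid i,
        if i < online then "online" else "offline" ))
```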

- If the guest is HVM, create a qemu-dm process. qemu-dm is told to emulate RTL8139 NICs for up to the first 8 VIFs, a USB tablet and a VNC framebuffer.

  - NB the command line links the VIF MAC address, dom0 bridge name and dom0 tapX.Y device together. For each working VIF, two interfaces end up on the bridge: vifX.Y, representing the PV drivers, and tapX.Y, representing the emulated hardware.

  - NB qemu only listens on 127.0.0.1 for VNC. It writes the port number to xenstore, and xapi is responsible for forwarding connections to it.
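The pairing of VIFs with emulated NICs, and the interfaces that end up on each bridge, can be sketched as follows. The sketch assumes that "the first 8 VIFs" means the eight with the lowest device numbers. It only computes names and does not reproduce qemu-dm's actual command-line syntax.

```ocaml
(* A VIF as seen by this sketch: device number, MAC and the dom0 bridge it joins. *)
type vif = { devid : int; mac : string; bridge : string }

(* qemu-dm emulates NICs for at most the first 8 VIFs. *)
let max_emulated_nics = 8

let rec take n = function
  | x :: rest when n > 0 -> x :: take (n - 1) rest
  | _ -> []

(* Pair each emulated VIF's MAC and bridge with its dom0 tapX.Y device. *)
let emulated_nics ~domid vifs =
  vifs
  |> List.sort (fun a b -> compare a.devid b.devid)
  |> take max_emulated_nics
  |> List.map (fun v -> (v.mac, v.bridge, Printf.sprintf "tap%d.%d" domid v.devid))

(* Interfaces on a VIF's bridge: the PV vifX.Y and, when emulated, tapX.Y too. *)
let bridge_ports ~domid ~emulated v =
  let pv = Printf.sprintf "vif%d.%d" domid v.devid in
  if emulated then [ pv; Printf.sprintf "tap%d.%d" domid v.devid ] else [ pv ]
```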

- If the guest is PV and other-config:disable_pv_vnc is not true, run a vncterm process to export the console pty over VNC.

  - NB vncterm only listens on 127.0.0.1 for VNC. It writes the port number to xenstore, and xapi is responsible for forwarding connections to it.

  - NB if other-config:disable_pv_vnc=true, no vncterm process is run.
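The choice of which process exports the console can be summarised as a small decision function. It assumes that other-config:disable_pv_vnc is compared against the exact string "true". Whichever process is chosen listens only on 127.0.0.1.

```ocaml
type console = Qemu_vnc | Vncterm | No_vnc

(* HVM guests get their VNC console from qemu-dm; PV guests get a vncterm
   process unless other-config:disable_pv_vnc is "true". *)
let console_for ~is_hvm ~other_config =
  if is_hvm then Qemu_vnc
  else if List.assoc_opt "disable_pv_vnc" other_config = Some "true" then No_vnc
  else Vncterm
```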

- Write VM.memory_dynamic_min and VM.memory_dynamic_max to xenstore for the per-host ballooning daemon to use.

- Update the VM's start time in the database (VM_metrics.set_start_time).

- Set the VM's last-booted record (Helpers.set_boot_record).

- Delete any guest metrics associated with the VM in the database (Xapi_vm_helpers.delete_guest_metrics).

- Atomically clear VM.scheduled_to_be_resident_on and set VM.resident_on.

- If the domain is to be started paused, set VM.power_state to Paused.

- If the domain is to be started running, unpause the domain and set VM.power_state to Running.
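Here is a sketch of the final bookkeeping on a toy VM record. The record type, the `localhost` parameter and the `unpause` callback are hypothetical. In this single-threaded model, the atomic update is simply two assignments with nothing in between.

```ocaml
type power_state = Halted | Paused | Running

(* Toy VM record: only the fields the final steps touch. *)
type vm = {
  mutable power_state : power_state;
  mutable scheduled_to_be_resident_on : string option;
  mutable resident_on : string option;
}

(* Move the VM from "scheduled" to "resident" on this host, then either leave
   the domain paused or unpause it, and record the resulting power state. *)
let finish_start ~localhost ~start_paused ~unpause vm =
  vm.scheduled_to_be_resident_on <- None;
  vm.resident_on <- Some localhost;
  if start_paused then vm.power_state <- Paused
  else begin
    unpause ();
    vm.power_state <- Running
  end
```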

- Unlock the VM.